Introduction: Why Technical SEO Matters More Than Ever

Imagine crafting a stunning website with compelling content, sleek visuals, and a well-planned user journey, yet no one can find it. This is what happens when technical SEO is overlooked.

Technical SEO is the foundation of your site’s visibility and performance. Without it, search engines struggle to crawl, understand, and rank your content. For web developers and digital marketers, understanding technical SEO best practices isn’t just useful—it’s essential.

This article dives deep into the technical aspects of SEO that directly influence how your website performs in search rankings. We’ll break down the most critical components, including site speed optimization, mobile responsiveness, crawlability, XML sitemaps, schema markup, and HTTPS, in a way that’s both easy to follow and actionable.

What Is Technical SEO?

Technical SEO refers to optimizing your website’s infrastructure to ensure search engines can crawl, interpret, and index it properly. It’s the “behind-the-scenes” work that makes a website accessible and understandable to search engines like Google.

While on-page SEO focuses on content and keywords, and off-page SEO involves backlinks and authority, technical SEO makes sure all that effort actually pays off.

A technically optimized website is fast, mobile-friendly, secure, and structured in a way that makes it easy for both users and search engines to navigate. In essence, technical SEO is what enables everything else to work.

Site Speed Optimization: Your First Technical Priority

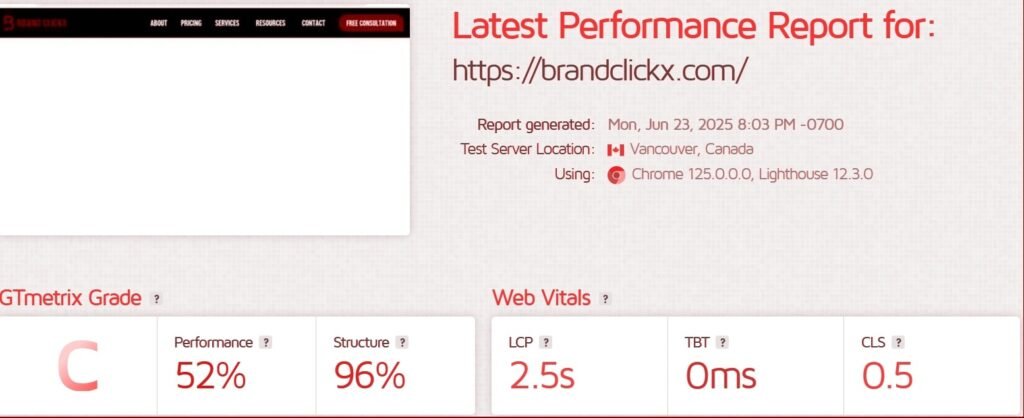

Speed is one of the first things to get right. Google has confirmed that page speed is a ranking factor, and speed also directly affects user experience: a slow-loading website increases bounce rates and lowers conversions.

Tools to Measure Site Speed:

- Google PageSpeed Insights

- GTmetrix

- Lighthouse

- WebPageTest

Actionable Best Practices:

- Minimize HTTP Requests

Reduce the number of elements on your page (scripts, images, CSS files) that require requests to the server.

- Optimize Images

Use next-gen formats like WebP, compress large files, and use responsive image attributes (srcset) to serve appropriately sized images on different devices.

- Enable Lazy Loading

Load images and iframes only when they enter the viewport, rather than all at once.

- Minify and Combine CSS & JavaScript

Remove unnecessary spaces, comments, and code. Use tools like UglifyJS or CSSNano.

- Leverage Browser Caching and GZIP Compression

Store static files locally in users’ browsers and compress files before sending them to reduce load time.
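Two of the practices above can be sketched in a few lines of Python. This is a minimal illustration, not a production build step: the `responsive_img` and `gzip_savings` helpers, the file naming pattern (`hero-480.webp`), and the sample stylesheet are all assumptions made for the example.

```python
import gzip
from html import escape


def responsive_img(base: str, ext: str, widths: list[int], alt: str) -> str:
    """Build an <img> tag with srcset and native lazy loading.

    Assumes resized files already exist, e.g. hero-480.webp and hero-960.webp.
    """
    srcset = ", ".join(f"{base}-{w}.{ext} {w}w" for w in widths)
    fallback = f"{base}-{widths[0]}.{ext}"  # first width doubles as the default src
    return (
        f'<img src="{escape(fallback)}" srcset="{escape(srcset)}" '
        f'sizes="100vw" alt="{escape(alt)}" loading="lazy">'
    )


def gzip_savings(payload: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing a non-empty payload."""
    compressed = gzip.compress(payload)
    return 1 - len(compressed) / len(payload)


# Hypothetical stylesheet: repetitive text compresses very well.
css = b"body { margin: 0; padding: 0; }\n" * 200

print(responsive_img("/img/hero", "webp", [480, 960, 1440], "Product hero"))
print(f"gzip saved {gzip_savings(css):.0%} of the stylesheet")
```

In practice the web server or CDN usually handles compression and caching headers, so a script like this is mostly useful for estimating how much a given file would shrink.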

Faster sites lead to better rankings and happier users, making speed optimization a non-negotiable part of technical SEO best practices.

Mobile Responsiveness: Technical SEO Starts on the Small Screen

Mobile traffic dominates the internet, and Google now uses mobile-first indexing, meaning the mobile version of your site is what gets crawled and ranked.

How to Test Mobile Responsiveness:

- Lighthouse mobile audits (Google retired its standalone Mobile-Friendly Test in late 2023)

- Chrome DevTools (Responsive View)

- BrowserStack for device simulations

Best Practices for Mobile Optimization:

- Responsive Design: Use fluid grids and flexible images that adjust to any screen size.

- Readable Fonts and Tap Targets: Ensure font sizes are legible and buttons/links are easy to click on smaller screens.

- Prioritize Mobile Page Speed: Mobile users are often on slower connections. Use AMP (Accelerated Mobile Pages) where necessary.

A mobile-optimized site ensures your users have a seamless experience and keeps your SEO intact in a mobile-first world.

Crawlability: Ensuring Search Engines Can Find You

If your site can’t be crawled, it can’t be indexed, and that means it can’t rank. Crawlability is the property that determines whether search engines can navigate your website.

Key Elements to Control Crawlability:

- Robots.txt File

This file tells search engines which pages they can or cannot crawl. Be careful: one incorrect directive can block your entire site.

- Crawl Budget Management

Each site gets a certain “budget” of pages Googlebot will crawl in a given period. Optimize your crawl budget by reducing unnecessary pages (thin content, duplicate pages) and improving site structure.

- Fix Crawl Errors

Use Google Search Console to identify 404 errors, redirect loops, or server errors. Monitor and fix them regularly.

- Strategic Internal Linking

Internal links help search engines discover new content and better understand your site hierarchy.

- Clean, Logical URL Structure

Avoid complex parameters. Use short, descriptive URLs that reflect your site’s architecture.
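The robots.txt warning above is easy to test before deploying a change. The sketch below uses Python’s standard `urllib.robotparser` module to check a list of important URLs against a candidate file. The `blocked_urls` helper, the example.com URLs, and both robots.txt snippets are hypothetical. Python’s parser also does not replicate every Googlebot rule (wildcard patterns, for instance), so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt forbids `agent` to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


# Hypothetical pages that must stay crawlable, plus one that should not be.
important = [
    "https://example.com/",
    "https://example.com/blog/technical-seo",
    "https://example.com/admin/login",
]

intended = "User-agent: *\nDisallow: /admin/\n"
mistake = "User-agent: *\nDisallow: /\n"  # a bare slash blocks the whole site

print(blocked_urls(intended, important))  # only the /admin/ URL
print(blocked_urls(mistake, important))   # every URL
```

Running a check like this in a deployment pipeline is one way to catch an accidental site-wide block before search engines see it.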

Improving crawlability is one of the simplest yet most powerful technical SEO best practices you can implement.

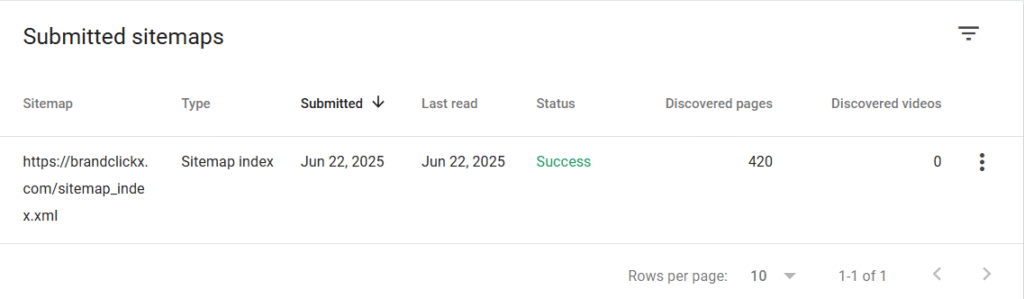

XML Sitemaps: A Map for Search Engines

An XML sitemap is like a roadmap for search engines: it lists the pages you want crawled, along with optional details such as when each page was last updated.

Why It Matters:

- Helps ensure all important pages are discoverable

- Helps with the indexing of large or complex websites

- Can highlight the last updated date and importance of pages

Best Practices for Sitemaps:

- Only Include Indexable Pages

Avoid adding pages that are blocked by robots.txt or marked noindex.

- Keep It Updated

Automate your sitemap with your CMS or plugins to reflect real-time changes.

- Submit to Search Engines

Use Google Search Console and Bing Webmaster Tools to submit and validate your sitemap.
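If your CMS does not generate a sitemap for you, the first two practices above can be approximated with a short script. This is a sketch under assumptions: the `Page` record, its `indexable` flag, and the example URLs are invented for illustration, and a real site would pull this data from its own database or crawl.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    lastmod: str     # W3C date format, e.g. "2025-01-15"
    indexable: bool  # False for noindex or robots.txt-blocked pages


def build_sitemap(pages: list[Page]) -> str:
    """Serialize only the indexable pages as a sitemap.xml document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if not page.indexable:
            continue  # keep blocked and noindex pages out of the sitemap
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page.url
        ET.SubElement(url, "lastmod").text = page.lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; the thank-you page is marked noindex and is skipped.
pages = [
    Page("https://example.com/", "2025-01-15", True),
    Page("https://example.com/blog/technical-seo", "2025-01-10", True),
    Page("https://example.com/thank-you", "2024-11-02", False),
]

print(build_sitemap(pages))
```

Regenerating the file whenever content is published keeps the last-modified dates accurate without manual edits.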

An optimized XML sitemap works hand-in-hand with crawlability to improve search engine access and indexation.

Schema Markup: Speak Google’s Language

Schema markup is a form of structured data that helps search engines understand your content in a more meaningful way.

Why Use Schema?

- Enables rich results like review stars, FAQs, and event data

- Boosts CTRs by enhancing SERP visibility

- Helps with voice search optimization

Types of Schema You Should Use:

- Article

- Product

- Local Business

- Breadcrumb

- FAQ

- Review

How to Add Schema:

- Use Google’s Structured Data Markup Helper

- WordPress plugins like RankMath or Yoast

- Add directly in HTML using JSON-LD format
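For the JSON-LD route, the markup is just a JSON object wrapped in a script tag, so it can be generated from data you already have. The example below is a minimal sketch for the Article type. The `article_json_ld` helper and the sample values are assumptions, and the properties shown are a small subset of what schema.org defines, so validate the output with the testing tools before relying on it.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> tag containing Article structured data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article details.
print(
    article_json_ld(
        headline="Technical SEO Best Practices",
        author="Jane Doe",
        date_published="2025-01-15",
        url="https://example.com/blog/technical-seo",
    )
)
```

The resulting tag can be placed in the page’s head or body; generating it from the same fields that render the page helps keep the markup and the visible content in sync.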

Testing Tools:

- Google Rich Results Test

- Schema.org validator

Schema isn’t required to rank, but it greatly enhances your site’s ability to stand out in the crowded SERPs.

HTTPS and Site Security: A Trust Signal for Google

Google has confirmed that HTTPS is a ranking signal, and more importantly, users expect a secure experience.

How to Secure Your Site:

- Install an SSL Certificate

Use providers like Let’s Encrypt or purchase one from your host.

- Redirect HTTP to HTTPS

Set up a 301 redirect to avoid duplicate content issues.

- Fix Mixed Content

Ensure all resources (images, scripts, styles) are loaded over HTTPS to prevent browser warnings.
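Mixed content is tedious to find by eye but simple to scan for. The sketch below uses Python’s standard `html.parser` to list subresources that are still requested over plain HTTP. The `find_mixed_content` helper, the tag list, and the sample page are assumptions for illustration, and the scan only sees static HTML, so resources injected by JavaScript or referenced inside CSS would need a browser-based check.

```python
from html.parser import HTMLParser

# Tags whose URL attribute loads a subresource. Plain <a href> links are
# navigation, not mixed content, so they are deliberately left out.
RESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "iframe": "src",
    "source": "src",
    "link": "href",
}


class MixedContentFinder(HTMLParser):
    """Collect subresource URLs that use the insecure http:// scheme."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        wanted = RESOURCE_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


# Hypothetical page fragment with two insecure resources.
page = """
<link rel="stylesheet" href="https://example.com/site.css">
<img src="http://example.com/logo.png" alt="Logo">
<script src="http://cdn.example.com/app.js"></script>
<a href="http://example.org/">External link</a>
"""

print(find_mixed_content(page))
# ['http://example.com/logo.png', 'http://cdn.example.com/app.js']
```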

Security is vital not only for SEO but also for user trust and compliance with data regulations.

Technical SEO Audits: Keeping Your Site in Check

A technical SEO audit is like a health check-up for your website. It identifies issues that could be hurting your rankings or user experience.

Tools for Auditing:

- Screaming Frog SEO Spider

- SEMrush Site Audit

- Ahrefs Site Audit

- Google Search Console

- GTmetrix

What to Check in a Technical Audit:

- Mobile usability

- Page speed

- Sitemap and robots.txt validity

Regular technical audits are one of the most effective technical SEO best practices for maintaining a healthy and high-performing website.

Common Technical SEO Mistakes (and How to Fix Them)

Even experienced developers and marketers make technical SEO mistakes. Here are some common ones to avoid:

- Slow Page Load Times: Usually caused by unoptimized images or bloated code. Compress images and minify CSS and JavaScript.

- Broken Internal Links: Regularly scan your site for 404s and fix them.

- No Alt Text for Images: Missing alt text hurts both accessibility and SEO. Add a short description to every meaningful image.

- Poor Mobile Experience: Failing mobile tests can cost you rankings.

- Unoptimized Robots.txt: Don’t accidentally block important pages.

- Duplicate Content: Use canonical tags where needed.

- Orphaned Pages: Ensure all pages are linked internally.
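Orphaned pages are a good example of a mistake that is easier to detect with code than by browsing. Assuming you already have a list of known pages (from a sitemap, say) and a map of which pages link to which (from a crawl), a breadth-first search from the homepage reveals anything that cannot be reached. The `find_orphans` helper and the sample link graph below are hypothetical.

```python
from collections import deque


def find_orphans(links: dict[str, set[str]], known_pages: set[str], home: str) -> set[str]:
    """Return known pages that cannot be reached by following internal links from home."""
    reachable = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, set()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return known_pages - reachable


# Hypothetical crawl data: page -> internal links found on that page.
links = {
    "/": {"/blog", "/about"},
    "/blog": {"/blog/post-1"},
    "/old-landing": {"/"},  # links out, but nothing links to it
}
known_pages = {"/", "/blog", "/about", "/blog/post-1", "/old-landing"}

print(find_orphans(links, known_pages, home="/"))  # {'/old-landing'}
```

Each page the function returns needs either an internal link from a relevant page or, if it is no longer useful, a redirect or removal.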

Fixing these issues can have a quick and noticeable impact on your SEO performance.

How BrandClickX Can Help

Whether you’re a marketer overwhelmed by technical details or a developer focused on execution, having the right SEO partner makes all the difference.

At BrandClickX, we specialize in the kind of detailed technical SEO that drives measurable performance. From improving site speed to managing structured data and technical audits, our services are tailored for businesses that understand the value of being found online.

If you’re ready to take your technical SEO to the next level, our team is here to support you—quietly, efficiently, and effectively.

Conclusion: Technical SEO Is Never “One and Done”

Technical SEO is not a one-time fix—it’s an ongoing strategy that evolves with your website and search engine algorithms. From ensuring fast load times to making your site mobile-friendly and secure, mastering technical SEO best practices is what separates high-performing websites from the rest.

For web developers, it means building with scalability and optimization in mind. For marketers, it’s about working alongside technical teams to ensure visibility and usability.

If your site isn’t performing as expected, it may be time to review the technical foundation. And if you need help, BrandClickX is always ready to assist—no noise, just results.

FAQs

What are the top technical SEO best practices for 2025?

Some of the most important include optimizing site speed, ensuring mobile responsiveness, implementing schema markup, maintaining clean crawl paths, and securing your site with HTTPS.

How does site speed optimization impact SEO?

Google uses page speed as a ranking factor. Faster pages provide a better user experience, reduce bounce rates, and improve conversion rates—all of which support better search visibility.

Why is schema markup important for search visibility?

Schema helps search engines understand your content better and enables rich results like ratings, FAQs, and event details that make your listings more attractive in SERPs.

How can I test my website’s crawlability?

Use tools like Screaming Frog, Ahrefs, and Google Search Console to simulate and analyze how search engines crawl your site and identify any barriers.

Do I need both an XML sitemap and a robots.txt file?

Yes. A sitemap helps search engines discover your content, while robots.txt controls what they can or can’t access. They serve different purposes and should work together.